Apple AI

A significant fad has taken over Silicon Valley: artificial intelligence. Even though Apple hasn't mentioned the controversial term "AI" during a keynote, the technology is all over the company, where it is usually called machine learning or ML. Many think Apple is behind in machine intelligence, but we're here to tell you Apple AI is already here.

There's a reasonably good chance Apple will never refer to anything as "Artificial Intelligence" or AI in marketing, but the technology behind the buzzword, machine learning, is all over the company. Rumors are flying that Apple is working on its own internal chatbot similar to ChatGPT, but that's only the tip of the iceberg.

Apple has been working on machine learning tools for its devices for years, an early example being how its touchscreen keyboard worked on iPhone. It used algorithms to determine what letter a person meant to hit when typing and would learn based on each user.
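To make the idea concrete, here is a minimal sketch of how a touchscreen keyboard could guess the intended letter. It is not Apple's algorithm. The key positions, the Gaussian touch model, the `sigma` and `rate` values, and the function names are all assumptions chosen for illustration. The sketch weighs how close a tap landed to each key against a per-user prior that shifts as the user confirms letters.

```python
import math

# Hypothetical key centers on a unit grid (x, y); not real keyboard layout data.
KEY_CENTERS = {"q": (0.0, 0.0), "w": (1.0, 0.0), "e": (2.0, 0.0), "r": (3.0, 0.0)}


def touch_likelihood(touch, center, sigma=0.6):
    """Unnormalized Gaussian likelihood that a tap at `touch` was aimed at `center`."""
    dx, dy = touch[0] - center[0], touch[1] - center[1]
    return math.exp(-(dx * dx + dy * dy) / (2 * sigma * sigma))


def guess_key(touch, prior):
    """Pick the key that maximizes likelihood(touch | key) * prior(key)."""
    scores = {
        key: touch_likelihood(touch, center) * prior.get(key, 0.0)
        for key, center in KEY_CENTERS.items()
    }
    return max(scores, key=scores.get)


def update_prior(prior, key, rate=0.1):
    """Nudge the per-user prior toward a key the user just confirmed."""
    updated = {k: (1 - rate) * p for k, p in prior.items()}
    updated[key] = updated.get(key, 0.0) + rate
    return updated
```

With a uniform prior, a tap at `(1.4, 0.0)` resolves to the nearer key, "w". If the prior strongly favors "e", the same tap resolves to "e" instead, which is the kind of per-user adaptation described above.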

That keyboard feature evolved into an advanced predictive autocorrect system that now uses a transformer language model in iOS 17. Several other systems on Apple's platforms benefit from machine learning.

A note on terminology and Apple AI

We will refer to Apple's advanced machine learning efforts as "AI" or Artificial Intelligence to keep things simple. Apple doesn't use the term in its operating systems, services, or feature descriptions, but the general public uses it when discussing computer-generated content.

Apple tends to avoid using industry terms to distance its technologies from competitors. For example, AR and VR were not mentioned during the Apple Vision Pro demo. Instead, Apple called it Spatial Computing.

Apple AI is used as a catch-all term in this piece for anything related to machine learning, transformer technology, generative AI, and other systems being developed by the company. The term is not official by any stretch.

Siri Intelligence and AppleGPT

Siri is one of Apple's original machine learning efforts. It began as a spin-off of the research institute SRI International, and Apple acquired the startup behind it, Siri Inc., in 2010.

In its most basic form, Siri is a decision tree that translates verbal commands into yes/no variables until it reaches a solution. At least, that's how it generally operated at its inception.
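A decision tree of this kind can be sketched in a few lines. This is an illustration of the general technique, not Siri's actual implementation. The `Node` class, the keyword questions, and the action names such as `set_timer` are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Union


@dataclass
class Node:
    """One yes/no question about the transcribed command."""
    question: Callable[[str], bool]
    yes: Union["Node", str]
    no: Union["Node", str]


def decide(node, command):
    """Walk the tree until a leaf (an action name) is reached."""
    text = command.lower()
    while isinstance(node, Node):
        node = node.yes if node.question(text) else node.no
    return node


# Hypothetical questions and action names, chosen only for illustration.
TREE = Node(
    question=lambda text: "timer" in text,
    yes="set_timer",
    no=Node(
        question=lambda text: "weather" in text,
        yes="show_weather",
        no="web_search",
    ),
)
```

Calling `decide(TREE, "Set a timer for ten minutes")` follows the first "yes" branch and returns `set_timer`, while a command that matches no question falls through to `web_search`.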

Siri is much more advanced today and can handle far more general requests. Different command sequences can trigger various events, but there is room for improvement, given how quickly competitors like ChatGPT and Bard have grown.

Apple began referring to something called "Siri Intelligence" as a catch-all term for many machine learning algorithms occurring on devices. It is what surfaces calendar events from an email or suggests which apps to use based on the time of day.

This may seem rudimentary by today's standards, but it was quite the feature when it first rolled out. Now, Apple treats Siri as part of the operating system instead of just a virtual assistant.

It isn't clear what Apple has planned for Siri. An internal team was rumored to be working on a more intelligent voice assistant for Apple Vision Pro, but it was allegedly scrapped in favor of Siri.

Another rumor states that Apple is testing ChatGPT-like technology that is even being referred to as AppleGPT internally. While such a tool may never reach the public, it may be the first step toward a real AppleGPT or a smarter Siri.

Rumors of a secret Swiss "Zurich Vision Lab" suggest Apple has a dedicated facility for developing AI, ML, computer vision, and more advanced Siri models. It is likely where Apple Vision Pro and the technology surrounding it were developed.

In February 2023, Apple held its first annual AI summit to discuss advances in AI and ML, a sign of Apple's dedication to the field. However, it appears the first summit was mostly a team-building exercise.

Apple Silicon and the Neural Engine

Another clear indicator of work on Apple AI is Apple's Neural Engine. It first appeared on the iPhone's A11 Bionic processor and was used to process things like Face ID and camera actions.

The first Neural Engine was capable of performing 600 billion operations per second. That has since expanded to 31.6 trillion operations per second in the M2 Ultra.
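For a sense of scale, the jump between those two figures can be worked out directly. The snippet below uses only the numbers quoted above. The variable names are ours.

```python
A11_OPS_PER_SECOND = 600e9         # 600 billion, as quoted above
M2_ULTRA_OPS_PER_SECOND = 31.6e12  # 31.6 trillion, as quoted above

speedup = M2_ULTRA_OPS_PER_SECOND / A11_OPS_PER_SECOND
print(f"Roughly {speedup:.1f}x more operations per second")
# prints "Roughly 52.7x more operations per second"
```

In other words, the M2 Ultra's Neural Engine is rated at a little over 50 times the throughput of the first one.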

The Neural Engine is designed to process large amounts of data almost instantly, and it enables advanced photography algorithms and features like Portrait Mode.

The advancements of Apple's Neural Engine and the introduction of the M1 processor proved that Intel just wasn't going to cut it anymore. Apple AI processes can operate significantly faster on Apple Silicon thanks to the Neural Engine.

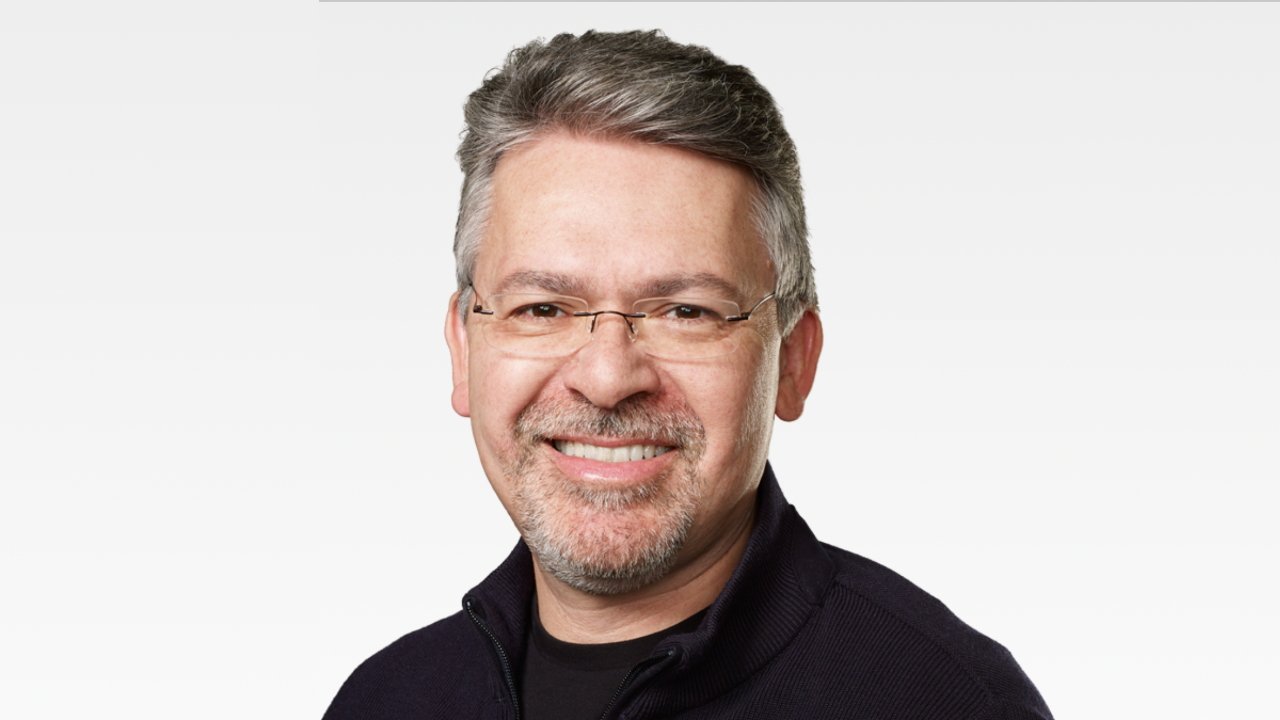

John Giannandrea

One of the few places you can find a mention of AI on Apple's website is in John Giannandrea's job description. He's Apple's Senior Vice President of Machine Learning and AI Strategy.

He reports directly to Apple CEO Tim Cook and has worked at the company since 2018. His bio says he oversees strategy for AI and ML across the company and the development of Core ML and Siri.

"John hit the ground running at Apple and we are thrilled to have him as part of our executive team," Cook said after hiring Giannandrea. "Machine learning and AI are important to Apple's future as they are fundamentally changing the way people interact with technology, and already helping our customers live better lives. We're fortunate to have John, a leader in the AI industry, driving our efforts in this critical area."

Apple didn't hire Giannandrea by mistake — his eight years at Google were spent developing advanced AI systems. And one of his foundational principles for developing Apple AI is privacy.

"I understand this perception of bigger models in data centers somehow more accurate," he said in an interview, "but it's actually wrong. It's better to run the model close to the data rather than moving the data around."

That has led to Apple's push for more private on-device actions.

Privacy as a service

OpenAI's ChatGPT, Google's Bard, and Meta's Llama all have one thing in common: massive data stores built by collecting user data. This strategy has led these products to have an incredible understanding of people and how they think right out of the gate.

Apple's approach to developing with privacy in mind is often seen as a hindrance for AI rather than a boon. The consensus seems to be that more data means better algorithms.

However, Apple is ahead of the curve in silicon development and on-device training. Its restrictive data sets seem to have forced the company to think differently about how to train Apple AI, which could lead to a better model.

Apple's competitors suck in every piece of information on the internet to feed their models, which risks a phenomenon called model collapse. As more of the internet becomes AI-generated, large language models trained on it end up feeding on their own output and gradually lose their "intelligence."
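Model collapse is easier to picture with a toy simulation. The sketch below is not how any company trains a language model. It swaps the language model for a one-dimensional Gaussian, and the function name, the generation count, the sample size, and the seed are all assumptions chosen for illustration. Each generation is fitted only to samples produced by the previous generation, and the spread of what the "model" can produce tends to shrink over time.

```python
import random
import statistics


def simulate_collapse(generations=200, samples_per_generation=10, seed=0):
    """Return the spread of each generation's "model" (a fitted Gaussian)."""
    rng = random.Random(seed)
    mean, spread = 0.0, 1.0  # generation 0 stands in for human-written data
    history = [spread]
    for _ in range(generations):
        # The current model generates the next generation's training set...
        data = [rng.gauss(mean, spread) for _ in range(samples_per_generation)]
        # ...and the next model is fitted only to that synthetic data.
        mean = statistics.fmean(data)
        spread = statistics.pstdev(data)
        history.append(spread)
    return history


history = simulate_collapse()
print(f"spread at generation 0: {history[0]:.3f}")
print(f"spread at generation {len(history) - 1}: {history[-1]:.3g}")
```

The small sample size is deliberate: each refit loses a little of the tails, and with no fresh human data coming in, those losses compound from one generation to the next.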

The so-called Apple AI likely won't suffer from model collapse because it won't be trained on all the data on earth, its own outputs included. That means a healthier algorithm and less interference from non-human data.

Apple will likely have complete control over whatever feeds its models.

The future of Apple AI

Stories have cropped up in recent years about how Apple has fallen behind in developing AI. Some even suggest Apple hasn't started, given its lack of a public chatbot.

However, all evidence points to the contrary. Apple has been at the forefront of machine learning and other computer intelligence models for the past decade or more.

What's next for Apple AI is anyone's guess. It doesn't seem likely that Siri will become a chatbot that hallucinates when asked pointed questions, but that doesn't mean Apple isn't going to use LLMs, GPT-style models, or other tools.

Apple didn't utter the phrase "AI" once during WWDC 2023, in contrast to Google, which used the term over 100 times during I/O. But Apple AI was all over that developer conference, especially in visionOS.

Even iOS 17 has a key piece of technology being used to develop these popular chatbots — a transformer language model. It's being used for autocorrect, which has proven spectacular in the betas.

Apple Car

Apple's worst-kept secret is Project Titan, an autonomous vehicle project. While we've taken to calling this secret project Apple Car, it isn't clear if Apple will actually ever release a vehicle.

That said, the autonomous systems being developed by Project Titan will need a brain. That's where Apple AI comes in.

Everything Apple has developed around machine intelligence seems to be leading to Apple Car. A lot of technology introduced for Apple Vision Pro would work great on a vehicle after being scaled up.

Getting these systems to coordinate in real-time, detect objects, understand user commands, and generate feedback all at once is a job for an advanced Neural Engine. A report in 2021 suggested that Apple had completed such a chip and would begin testing it.

While Apple's hopes of developing a self-driving vehicle may be in the rearview mirror for now, all of the work to create advanced computational systems wasn't for nothing. Each bit of progress on one product aids the progress of another.

It seems all roads lead to Apple AI.

Malcolm Owen

Andrew Orr

Evan Selleck

William Gallagher