visionOS

visionOS is Apple's new platform for spatial computing. It powers the software running on Apple Vision Pro and will be the focal point of Apple's future products in the space.

● Spatial computing operating system

● An infinite spatial canvas available in AR and VR modes

● 3D apps and content viewable in windows and spaces

● Apps built with SwiftUI and RealityKit

● iPad and iPhone apps work with little developer intervention

● Eye and hand controls

● Supports physical keyboards, trackpads, and game controllers

Apple announced Apple Vision Pro during WWDC 2023 and an operating system to go with it — visionOS. It is Apple's first platform built for spatial computing.

Instead of building an isolating VR headset focused on transporting users to other worlds or a set of limited AR glasses that overlays apps on the real world, Apple chose a mixture of both. Primarily, visionOS is a mixed reality operating system that can adapt to the user's needs, be it complete isolation or social-friendly augmented computing.

The minimal previews for visionOS showed an operating system that had 2D and 3D elements based on Apple's existing operating system design. Transparent windows, icons with dimension, and objects that cast shadows attempt to blend digital with reality.

Apple Vision Pro is Apple's first product to run visionOS, but it won't be the last. Rumors suggest it will eventually power a set of AR glasses dubbed Apple Glass.

visionOS: going spatial

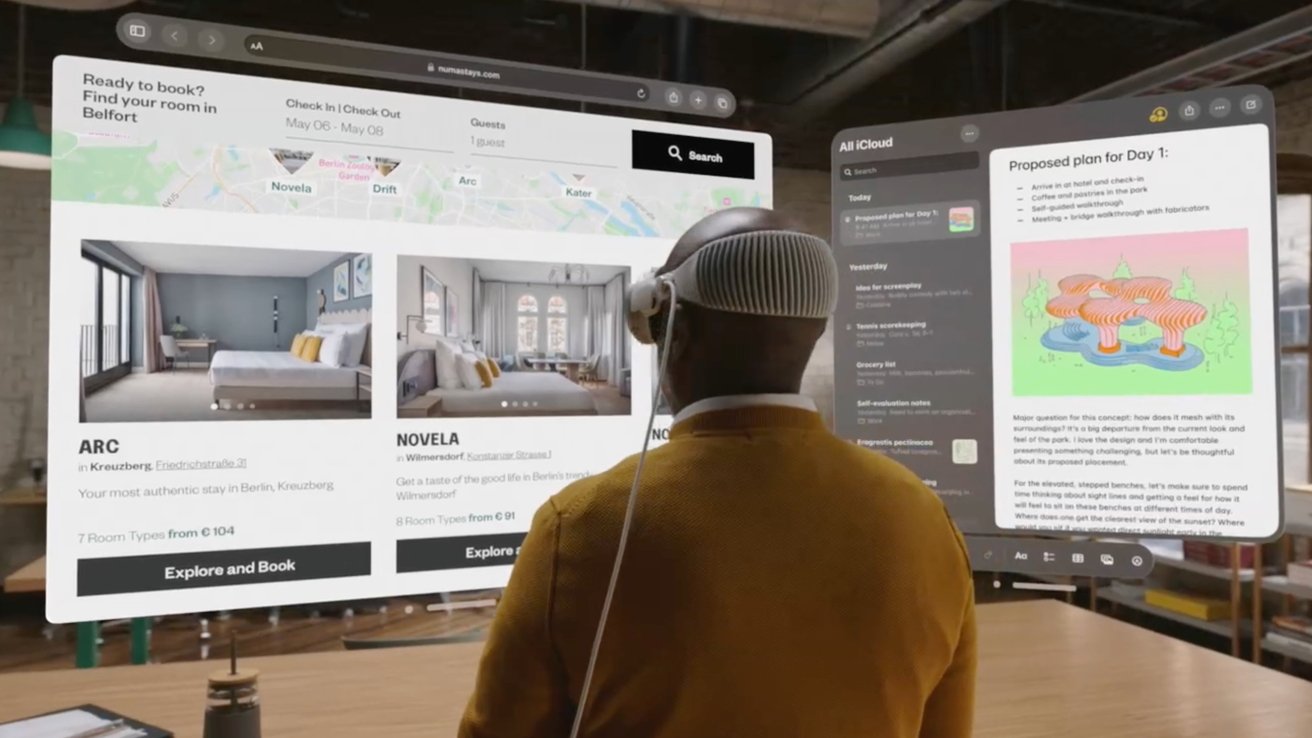

visionOS provides users with an infinite canvas to place apps in a 3D space. Apps can expand to take over a space and bring in 3D objects and video.

Design

Existing apps like Safari will show up as a 2D window floating in the air. This is also how iPad and iPhone apps will show up in the operating system.

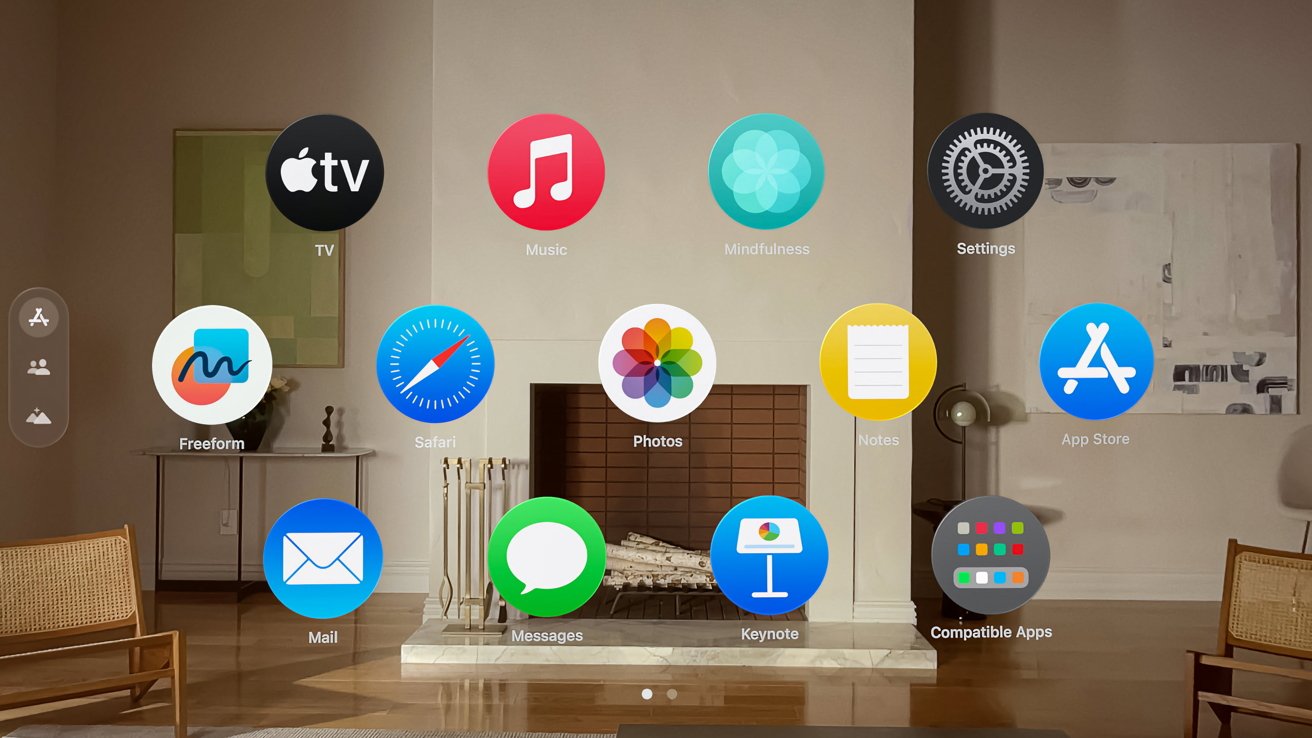

The Home Screen looks like a mix of tvOS and watchOS. Round icons arranged in a grid have a 2.5D effect that appears when the user interacts with or looks at them.

Cameras capture the room around the user and pass that through to the internal displays. With the headset on, the user is able to see a perfect representation of the room around them with the proper field of view and depth.

Objects and windows appear to have a physical presence with placement anchors, shadows, and transparency. ARKit plays a part in developing experiences, but 3D scenes have to be built with SwiftUI and RealityKit.

Gestures and control

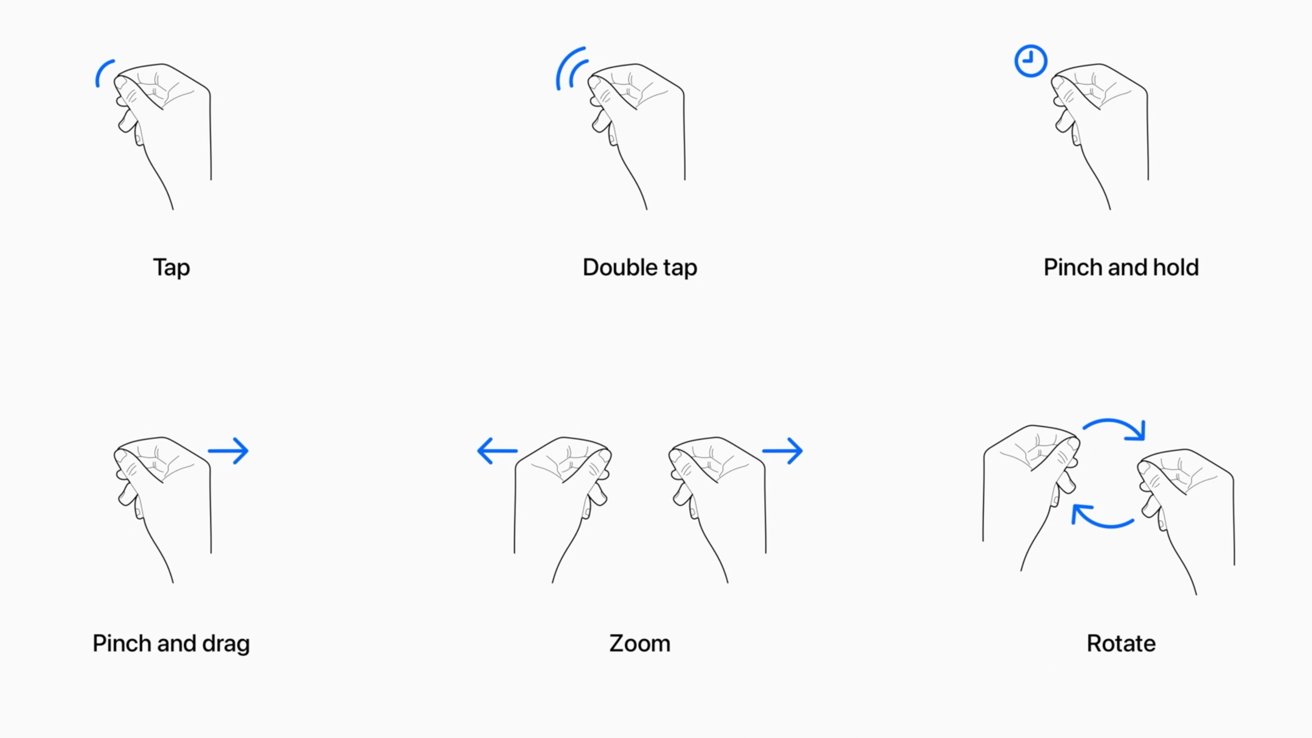

Hand gestures, eye tracking, and voice controls enable software manipulation without additional hardware. There is a virtual keyboard, but users can utilize Bluetooth keyboards and other input devices for improved interactions.

Eye tracking allows users to look at an object they wish to interact with. Selecting or grabbing objects is accomplished with a finger-pinching motion.
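For developers, the look-then-pinch interaction arrives as an ordinary tap. The SwiftUI sketch below is an illustration rather than Apple sample code, and `PinchCounterView` is a made-up name. It assumes the standard behavior where the system draws the gaze highlight itself and only hands the app the resulting tap.

```swift
import SwiftUI

// Hypothetical view, for illustration only.
struct PinchCounterView: View {
    @State private var pinchCount = 0

    var body: some View {
        VStack(spacing: 20) {
            Text("Pinched \(pinchCount) times")

            // Standard controls highlight under the user's gaze
            // and fire their action on a pinch.
            Button("Pinch me") {
                pinchCount += 1
            }

            // A custom view opts in to the gaze highlight with
            // hoverEffect() and receives the pinch as a tap.
            Text("Custom target")
                .padding()
                .hoverEffect()
                .onTapGesture {
                    pinchCount += 1
                }
        }
        .padding()
    }
}
```

Nothing in the view reads where the user is looking. The highlight is rendered by the system, so the app only learns about a target once it has been pinched.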

Look at a text field, then speak to fill it in. Bring up a virtual keyboard to ensure information is filled in with precision.

Objects will understand where the user is and what their hands are doing for further interaction. For example, placing a hand out might allow a virtual butterfly to land on a finger.

Force quitting apps is possible by holding down the Digital Crown and Top Button for a few seconds. Or, if it seems like tracking is off, recalibrate by pressing the Top Button four times in a row.

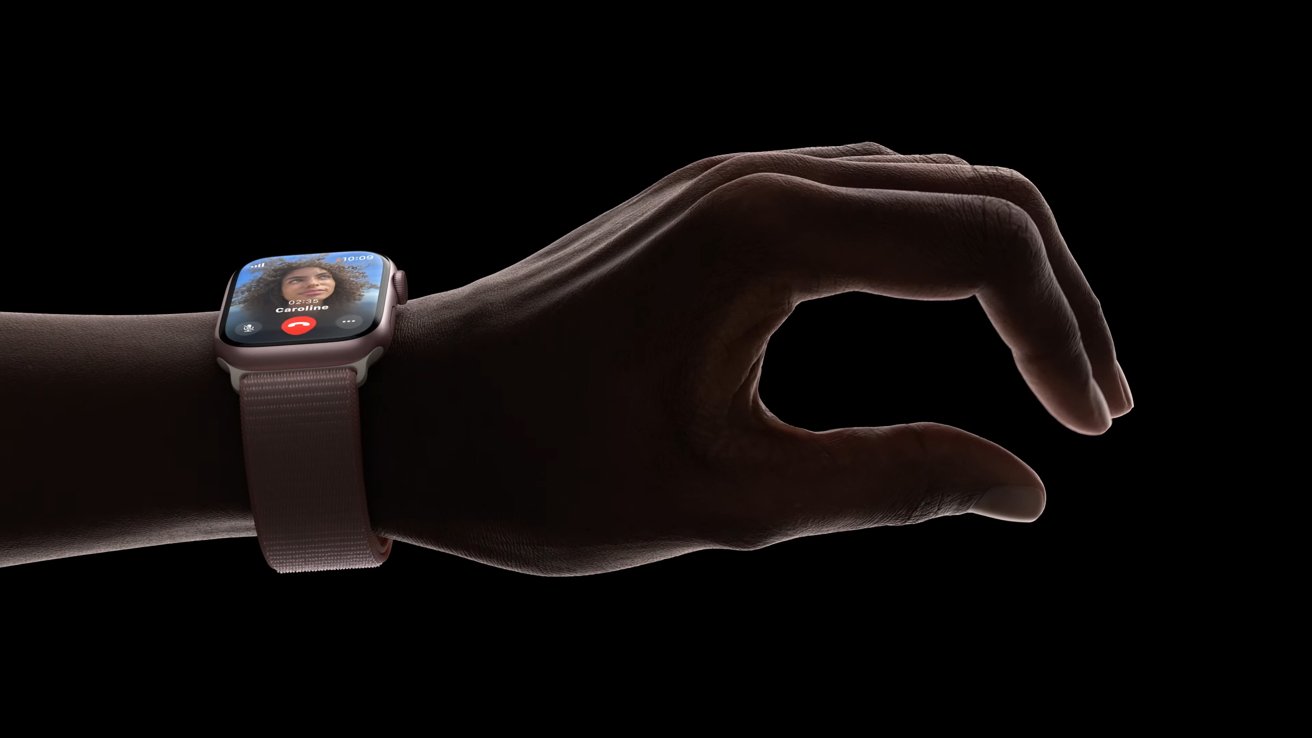

Apple seems to be preparing users for Double Tap on Apple Vision Pro by introducing a similar gesture for Apple Watch Series 9 and Apple Watch Ultra 2. When users tap their index finger and thumb together twice, it activates the primary button in whatever is on screen on the Apple Watch.

Double Tap on Apple Watch is possible thanks to advanced algorithms and the S9 SiP processing multiple sensor signals. Apple Vision Pro is able to interpret the gesture using cameras, but Apple Watch might add another element to that control feature.

FaceTime

Even though the user will have their face obscured by a headset, FaceTime is still possible on Apple Vision Pro. You'll see other participants through floating windows while you'll be presented via a Persona — a digital representation of yourself.

A user's Persona is created by scanning their face with the Apple Vision Pro cameras. The 3D model resembles the user but can fall into the uncanny valley, since it is fully computer generated.

Up to nine floating windows can be viewed during a FaceTime call. The usual limit of 32 participants is still there, but they won't all be visible.

Security

Apple Vision Pro has a new kind of biometric security — Optic ID. It scans the user's iris to authenticate logins, purchases, and more.

Optic ID works anywhere a person would otherwise use Touch ID or Face ID. As with those biometric systems, iris data is encrypted and stored in the Secure Enclave so that it never leaves the device.

Apple also prevents apps from harvesting data from the device's cameras and sensors. While eye tracking and video passthrough occur during app use, they happen in a separate layer that can't be accessed for third-party data collection.

As on iPhone or iPad, when it's time to authenticate something using Optic ID, the user confirms with a double-press of the Top Button. This prevents accidental authorization just by looking at a payment field.
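Because Optic ID slots in where Touch ID and Face ID already work, apps reach it through the same LocalAuthentication framework. The sketch below is a minimal example under that assumption. The helper name `confirmWithBiometrics` and the reason string passed to it are hypothetical.

```swift
import LocalAuthentication

// Hypothetical helper, for illustration only.
// Returns true when the user passes the device's biometric check.
func confirmWithBiometrics(reason: String) async -> Bool {
    let context = LAContext()
    var policyError: NSError?

    // Check that biometrics are enrolled and available first.
    guard context.canEvaluatePolicy(.deviceOwnerAuthenticationWithBiometrics,
                                    error: &policyError) else {
        return false
    }

    // biometryType is only meaningful after canEvaluatePolicy has run.
    // On Apple Vision Pro it reports .opticID.
    if context.biometryType == .opticID {
        print("Authenticating with Optic ID")
    }

    do {
        // The system presents its own prompt; the app never sees iris data.
        return try await context.evaluatePolicy(
            .deviceOwnerAuthenticationWithBiometrics,
            localizedReason: reason
        )
    } catch {
        return false
    }
}
```

The app receives only a success or failure result, which matches the article's point that biometric data stays in the Secure Enclave.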

Development

Apple has paved a clear path for developers to follow when creating apps and content for visionOS. However, some things won't work exactly as some may have hoped.

SwiftUI is the bedrock of coding for spatial computing. It has 3D-capable tools with support for depth, gestures, effects, and scenes.

RealityKit provides developers with the ability to generate 3D content, animations, and visual effects. It is able to adjust to physical lighting conditions and cast shadows.

ARKit can help an app understand a person's surroundings with things like Plane Estimation and Scene Reconstruction. This gives apps the ability to understand where a physical wall or floor is.
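A rough sketch of how an app might ask for that plane data on visionOS follows. It assumes the app is running on a device, already has an immersive space open, and has been granted world-sensing permission. The function name `logDetectedPlanes` is invented for this example.

```swift
import ARKit

// Hypothetical helper, for illustration only.
// Prints each wall, floor, or table surface the system detects.
func logDetectedPlanes() async {
    // Plane detection is unavailable in the simulator.
    guard PlaneDetectionProvider.isSupported else {
        print("Plane detection is not supported here")
        return
    }

    let session = ARKitSession()
    let planes = PlaneDetectionProvider(alignments: [.horizontal, .vertical])

    do {
        try await session.run([planes])
    } catch {
        print("Could not start the ARKit session: \(error)")
        return
    }

    // Updates arrive as an async sequence for as long as the session runs.
    for await update in planes.anchorUpdates {
        let anchor = update.anchor
        switch update.event {
        case .added, .updated:
            // classification distinguishes floor, wall, ceiling, table, and so on.
            print("Plane \(anchor.id): \(anchor.classification), \(anchor.alignment)")
        case .removed:
            print("Plane \(anchor.id) removed")
        }
    }
}
```

Scene Reconstruction follows the same pattern with `SceneReconstructionProvider`, which delivers mesh anchors instead of flat planes.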

However, apps built with ARKit are not automatically rendered in 3D. In fact, if an AR app built for iPhone is run in visionOS, it'll appear as a 2D plane showing content as if it were on an iPhone.

Developers will have to start over with RealityKit for 3D content. So no, you won't be catching Pokémon in your living room with full AR experiences just yet, at least not until Nintendo and Niantic develop an app specifically for that.

Note that Apple has avoided using industry-wide terms for its spatial computing platform. The 2D apps are called "windows," the 3D objects are called "volumes," and full environments are called "spaces" in place of terms like AR objects or VR experiences.
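In SwiftUI, those three terms map onto scene types. The following is a minimal sketch, not a complete app: the type names and the identifiers `"volume"` and `"space"` are made up for illustration, and the blue sphere stands in for real 3D content.

```swift
import SwiftUI
import RealityKit

@main
struct SceneTypesSketchApp: App {
    var body: some Scene {
        // Window: a flat 2D pane, like Safari or an iPad app.
        WindowGroup {
            LauncherView()
        }

        // Volume: a bounded 3D container that sits alongside other apps.
        WindowGroup(id: "volume") {
            SphereView(height: 0)
        }
        .windowStyle(.volumetric)
        .defaultSize(width: 0.5, height: 0.5, depth: 0.5, in: .meters)

        // Space: an environment the app can fill with content around the user.
        ImmersiveSpace(id: "space") {
            SphereView(height: 1.5)
        }
    }
}

// Hypothetical launcher with one button per scene type.
struct LauncherView: View {
    @Environment(\.openWindow) private var openWindow
    @Environment(\.openImmersiveSpace) private var openImmersiveSpace

    var body: some View {
        VStack(spacing: 16) {
            Button("Open volume") {
                openWindow(id: "volume")
            }
            Button("Enter space") {
                Task { _ = await openImmersiveSpace(id: "space") }
            }
        }
        .padding()
    }
}

// Placeholder RealityKit content shared by the volume and the space.
struct SphereView: View {
    let height: Float

    var body: some View {
        RealityView { content in
            let sphere = ModelEntity(
                mesh: .generateSphere(radius: 0.1),
                materials: [SimpleMaterial(color: .blue, isMetallic: false)]
            )
            // In the space, lift the sphere and push it one meter ahead.
            sphere.position = [0, height, height == 0 ? 0 : -1]
            content.add(sphere)
        }
    }
}
```

The same RealityKit content can appear in either a volume or a space. The scene type decides how much of the user's surroundings the app is allowed to occupy.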

The App Store will appear in a version of visionOS for beta testers later in 2023. It will be loaded with iPad apps from developers who haven't opted out of being included.

visionOS release and its future

Spatial computing is new territory for Apple, and it will take time for the products and software to mature. Apple Vision Pro is just the start in 2024, with a standard Apple Vision model expected in 2025 at a lower price, followed by AR glasses sometime after.

Apple's launch timeline seems to be on schedule as one report states that retail employees will be getting training for the new hardware and operating system in the first months of 2024. Managers will be sent off to be trained and bring back information to train the retail team.

More information will become available as developers get their hands on visionOS SDKs to begin app development. The operating system will launch along with the headset in early 2024.

Wesley Hilliard


Malcolm Owen


Peter Cohen


Chip Loder


Evan Selleck
